Introduction

Deep learning, a subset of artificial intelligence (AI), is loosely inspired by the layered structure and function of the human brain and is used to interpret many forms of data. This approach allows machines to identify intricate patterns within images, text, sound, and more, supporting nuanced insights and reliable predictions. More specifically, deep learning is a specialized subset of machine learning that distinguishes itself primarily through the structure and complexity of its models. Traditional machine learning relies on handcrafted features that experts extract from the data to train algorithms such as decision trees or linear regression models. These features are predefined, so significant domain expertise and manual intervention are needed to determine which data points will lead to accurate predictions or classifications.

In contrast, deep learning automates this feature extraction process. It uses neural networks with multiple layers (hence the term “deep”) that learn to identify features directly from the data. Starting from simple patterns and textures in the initial layers, deep neural networks progressively learn more abstract and complex representations as the data moves through their layers. This hierarchical learning approach enables deep learning models to handle vast arrays of unstructured data like images, audio, and text, which would be prohibitively complex for traditional machine learning models to process without extensive pre-processing.
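The layered flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the weights below are hand-picked and hypothetical (a real network learns them from data), and the names `dense`, `forward`, and `LAYERS` are invented for this example.

```python
def dense(inputs, weights, biases):
    """One fully connected layer followed by a ReLU activation."""
    outputs = []
    for row, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(max(0.0, z))  # ReLU: negative values become 0
    return outputs


def forward(inputs, layers):
    """Pass the input through each layer in turn.

    Returns every intermediate representation so the progression
    from simple to more abstract features can be inspected.
    """
    activations = [inputs]
    for weights, biases in layers:
        activations.append(dense(activations[-1], weights, biases))
    return activations


# Hypothetical, hand-picked weights (assumption for illustration only).
LAYERS = [
    # Layer 1: two simple detectors that respond to drops between neighbors.
    ([[1.0, -1.0, 0.0], [0.0, 1.0, -1.0]], [0.0, 0.0]),
    # Layer 2: combines both simple detectors into one higher-level feature.
    ([[1.0, 1.0]], [0.0]),
]

acts = forward([3.0, 1.0, 0.0], LAYERS)
for depth, values in enumerate(acts):
    print(f"representation after {depth} layer(s): {values}")
```

Each layer only sees the output of the layer before it, which is what lets later layers build on simpler features found earlier.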

Another key difference is the scale of data and computational power required. Deep learning models thrive on big data and high-performance hardware, such as GPUs, to train the large number of parameters within their deep architectures. As a result, they can achieve higher accuracy in tasks such as image recognition, natural language processing, and autonomous driving, where subtle nuances and patterns within the data can be highly significant.

A Brief History of Deep Learning

The foundations of deep learning trace back to pioneering work on artificial neurons in the 1940s. Over the following decades, research interest rose and fell in cycles, and the field went by various names. Researchers struggled to train deep models effectively with the computational and data resources then available, which often led to periods of waning interest as other methods outperformed neural networks.

The landscape shifted dramatically in 2006 due to key advancements by Geoffrey Hinton and his team. They introduced a method for efficiently training neural networks with many layers, which are the fundamental components of deep learning architectures, via a technique known as “layer-wise training.” This method trains individual layers one at a time and then fine-tunes the entire network. This innovation sparked a renaissance in the field, enabling not only the refinement of the specific model types they worked on but also the application of the technique to a broader range of deep learning structures. The success of these methods rejuvenated the field and cemented “deep learning” as the term of choice, symbolizing the newfound capability to train deep, complex models that were once beyond reach.

Current efforts have succeeded where past ones failed thanks to three key developments:

- Big Data: The explosion of data from the internet, sensors, and digitalization of records provided the vast amounts of information needed to train deep neural networks.

- Advanced Algorithms: Alongside earlier breakthroughs with convolutional neural networks (CNNs) for images and recurrent neural networks (RNNs) for sequential data, a big leap came with transformers. Introduced in 2017, transformers excel at data whose parts must be understood in relation to the whole, like the words in a sentence or the turns in a conversation. They use an attention mechanism to focus on the most relevant parts of the input when making a prediction. This is why they are the main technology behind AI systems like ChatGPT. They also parallelize well on modern GPUs, making them a key part of modern AI’s toolbox.

- Computational Power: The advent of powerful GPUs (Graphics Processing Units) and advancements in parallel computing made it practical to train deep neural networks, which require substantial computational resources.
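The way transformers focus on the most important parts of the data is commonly implemented as scaled dot-product attention. The sketch below shows that calculation for a single query in plain Python. It is a simplified illustration, not a full transformer: the vectors are made-up values, and the function names are invented for this example.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Each key is scored against the query; the scores become weights,
    and the output is the weighted average of the value vectors.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    width = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(width)]
    return weights, output


# Made-up example vectors (assumption for illustration only).
KEYS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
VALUES = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

weights, output = attention([4.0, 0.0], KEYS, VALUES)
print("attention weights:", [round(w, 3) for w in weights])
print("attended output:  ", [round(o, 3) for o in output])
```

The second key has nothing in common with the query, so it receives the smallest weight and contributes least to the output.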

These developments have led to impressive applications of deep learning across various domains, laying the groundwork for modern AI and expanding what machines can do.

What Makes Deep Learning Technology so Sought After?

Industries are actively using deep learning for object detection, feature tagging, image analysis, and high-speed data processing. What differentiates deep learning from other AI and machine learning technologies is its ability to learn patterns on its own from vast amounts of unstructured data.

Deep learning is highly sought after for several compelling reasons. The Artificial Intelligence Index Report 2023 reported: “Organizations that have adopted AI report realizing meaningful cost decreases and revenue increases.” Deep learning marks a significant shift in how machines learn from data and make decisions, leading to groundbreaking advancements. Here are some key factors that contribute to its allure:

Unparalleled Pattern Recognition

Deep learning excels at identifying complex patterns in data. It takes on the role of a digital detective, deciphering the twists and turns of data with neural networks loosely modeled on the human brain. It starts simple, recognizing basic shapes and textures, then gradually builds up to deciphering intricate features and connections within the data. Think of it like learning a language: you start with the alphabet and eventually compose poetry.

Pattern recognition sits at the heart of machine learning, enabling systems to classify and categorize data by learning from past experiences. It’s like giving a machine a history book filled with data patterns instead of historical events; the machine uses this book to recognize and understand new, similar patterns it encounters. Think of it as the machine’s way of saying, “I’ve seen something like this before, so I have a pretty good guess about what it is.” This process is rooted in the creation of algorithms that can take in input data, process it through statistical analysis, and make a decision about what category that data belongs to based on previously gained knowledge.
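As a minimal sketch of that idea, the example below uses a nearest-centroid classifier: it averages past examples for each category and assigns new data to the closest average. This is one of the simplest statistical classifiers, not a deep learning model. The labels and two-number feature vectors are invented for illustration and do not come from any real dataset.

```python
def centroid(points):
    """Average a list of equal-length feature vectors."""
    count = len(points)
    return [sum(p[i] for p in points) / count for i in range(len(points[0]))]


def fit(examples):
    """Learn one centroid per label from past, labeled examples."""
    return {label: centroid(points) for label, points in examples.items()}


def predict(model, features):
    """Return the label whose centroid is closest to the new data point."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(model, key=lambda label: squared_distance(model[label], features))


# Hypothetical training data (assumption): two made-up features per example.
TRAINING = {
    "intact": [[0.1, 0.2], [0.2, 0.1], [0.0, 0.3]],
    "damaged": [[0.9, 0.8], [0.8, 1.0], [1.0, 0.9]],
}

model = fit(TRAINING)
print(predict(model, [0.85, 0.7]))
print(predict(model, [0.15, 0.25]))
```

The "history book" here is the set of stored centroids: a new point is labeled by which past pattern it most resembles.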

Shallow learning, as applied in some machine learning systems, focuses on direct, often linear relationships in data. It’s akin to skimming a book: you get the gist of the story but miss the underlying themes and nuanced character development. Such systems might recognize patterns or flag anomalies based on explicit instructions or limited layers of processing, suitable for simpler tasks where the complexities of the data are minimal or already well-defined. Other businesses that offer damage detection may rely on this form of machine learning for its straightforward implementation and interpretation, offering a basic level of insight into structured data sets.

Loveland Innovations, however, harnesses the more sophisticated approach of deep learning. This isn’t just pattern recognition—it’s pattern comprehension. Like diving into a novel and grasping not just the plot but also the subtle metaphors and the author’s intent, deep learning models discern and ‘learn’ the intricacies within vast amounts of unstructured data. It’s a robust method that excels in identifying, interpreting, and predicting complex data patterns, much like how a seasoned critic understands and appreciates literature on a deeper level than a casual reader. While competitors may stop at the surface, Loveland Innovations dives into the depths of data, leveraging the full potential of AI to deliver nuanced, comprehensive insights that go beyond the capabilities of traditional shallow learning techniques.

Automation and Efficiency

Deep learning excels at deciphering the complexities of large datasets, a task that would be arduous, if not impossible, for humans to undertake with the same speed and accuracy. By automating the analysis of data, these systems liberate human workers from the tedium of poring over spreadsheets or scrutinizing thousands of images for minute anomalies. It’s like having a tireless digital workforce dedicated to the meticulous and rapid processing of information, allowing human workers to redirect their efforts to more strategic and creative tasks.

This automation extends beyond data analysis into decision-making and predictive modeling, fields once thought to be the exclusive dominion of human intellect. The efficiency gained from deep learning isn’t just about speed; it’s about the ability to make more informed decisions, reduce errors, and enhance the quality of outputs across services and products. As deep learning continues to evolve, its role as the linchpin of automation and efficiency becomes clearer, transforming digital potential into performance for businesses.

Big Data Handling

In the current digital era, where every click, swipe, and interaction generates a data point, the volume of information is growing at an unprecedented rate. Deep learning algorithms are the powerhouses in this data-rich environment, thriving on the sheer scale of information that would overwhelm traditional analytical methods. The more data these algorithms are fed, the more refined and accurate their predictions and insights become. This affinity for large datasets makes deep learning not just useful, but indispensable for extracting valuable insights from the digital ocean of data we’re creating every second.

Continuous Improvement

The journey of deep learning models towards improvement is not always a straightforward path of continuous enhancement. While these models possess the potential to refine their algorithms with more data, this process is not automatic or guaranteed. It’s not uncommon for these models to become overly fixated on certain data nuances—idiosyncrasies that may not be broadly applicable or predictive of future data. This can lead to a phenomenon known as overfitting, where the model performs well on the training data but fails to generalize to new, unseen data, causing a decline in performance.

Therefore, the advancement of deep learning models is not self-driven but curated. Engineers and data scientists play a crucial role, continuously monitoring, adjusting, and guiding the learning process to prevent the models from veering off course. They employ strategies to ensure that the models do not stagnate or regress but rather maintain their trajectory towards becoming more precise and reliable. This is the art and science of deep learning—balancing the experiences gained from data with the discerning eye of human expertise to foster truly intelligent systems.
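One common strategy for this kind of monitoring is early stopping: track the loss on held-out validation data and keep the model from the epoch where that loss was lowest. The sketch below is a generic illustration, not a description of any particular product's training pipeline. The loss values are hypothetical numbers chosen to show the typical overfitting pattern, and `early_stopping_epoch` and `patience` are names assumed for this example.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch whose model should be kept.

    Training stops once the validation loss has failed to improve
    for `patience` consecutive epochs.
    """
    best_epoch = 0
    best_loss = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break  # validation loss keeps rising: likely overfitting
    return best_epoch


# Hypothetical per-epoch losses (assumption for illustration only).
# Training loss keeps falling while validation loss turns upward.
TRAIN_LOSSES = [0.90, 0.60, 0.40, 0.25, 0.15, 0.08, 0.04]
VAL_LOSSES = [0.95, 0.70, 0.55, 0.50, 0.53, 0.60, 0.70]

best = early_stopping_epoch(VAL_LOSSES)
print("keep the model from epoch", best)
print("final train/validation gap:", round(VAL_LOSSES[-1] - TRAIN_LOSSES[-1], 2))
```

A widening gap between training and validation loss is the usual signal that the model is memorizing quirks of the training data instead of learning patterns that generalize.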

Competitive Advantage

Organizations that leverage deep learning stand at the forefront of data-driven decision-making. They can detect nuanced patterns and correlations that human analysts might miss, extracting needles of insight from haystacks of data. The ability to harness and make sense of vast datasets translates directly to a competitive edge in today’s market, ensuring that decisions are informed by the most comprehensive and sophisticated analysis available. As data continues to grow exponentially, deep learning stands as a beacon of potential, guiding businesses and institutions toward more informed, intelligent, and effective decision-making processes.

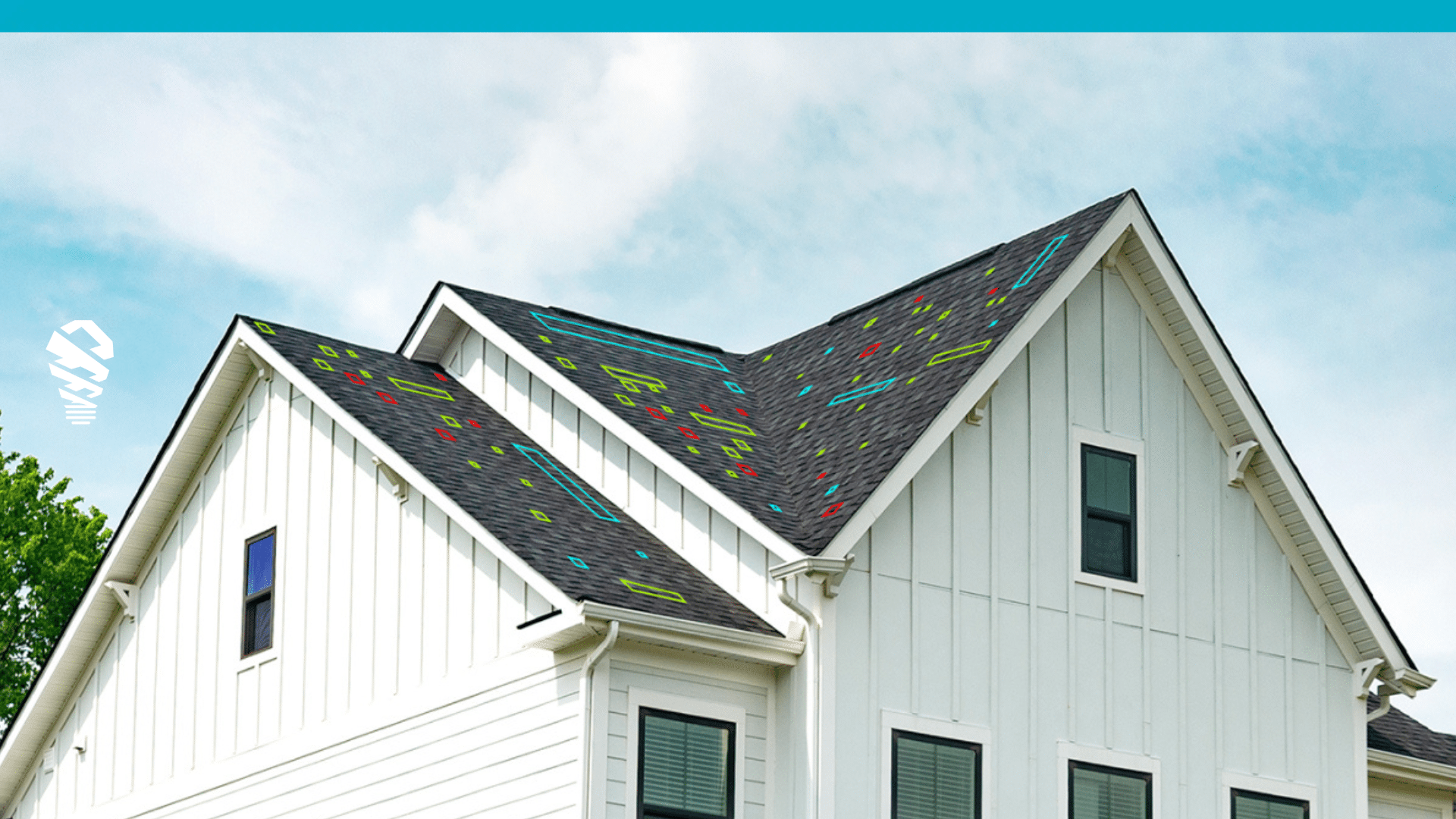

IMGING

Embracing unbiased AI and deep learning for roof inspections epitomizes the technological leap forward in property assessment. With such advanced tools at our disposal, inspectors can illuminate damage with unparalleled clarity and speed, making the meticulous process of manual inspections seem archaic. IMGING’s deep learning framework has the ability to rapidly analyze and highlight roof damage. Not only does this facilitate a faster inspection process, but it also enables inspectors to substantiate their findings with a level of detail and accuracy that sets them apart from the competition. This differentiation can be a decisive factor in winning bids and expanding business opportunities, as clients increasingly seek the most reliable and technologically advanced services available.

Conclusion

Deep learning has catalyzed a shift in the way we approach damage detection and many other complex tasks. By simulating the intricate architecture of the human brain, this technology has brought about a revolution in pattern recognition, automated efficiency, and big data analysis, driving continuous improvement through meticulous data interaction. The rigorous process of fine-tuning and evolving these models underscores the synergy between human expertise and machine learning—a collaboration that enhances decision-making capabilities and fosters a competitive advantage across industries. Particularly in applications like IMGING Detect, deep learning transcends traditional methods, offering speed, accuracy, and clarity that redefine industry standards. As we continue to generate data at an extraordinary rate, deep learning stands as a cornerstone of innovation, promising to illuminate insights and forge pathways to progress that were once unachievable.